@Maatdeamon

You can use LOADACCUM to load parameter files into a global accumulator. And the loaded global accumulator can invoke an expression function to do the scoring.

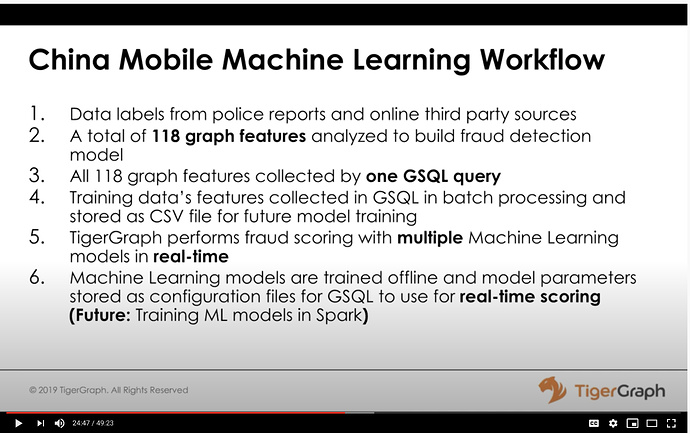

E.g. below is a query we used to do scoring. TigerGraph is used to collect graph feature, and we got the trained classifier parameter from external ML tools, and load them into global accumulators, and for a new phone, we collect its graph features, and called the user-define scoring function with the classifier parameters and the newly collected features.

CREATE QUERY scoringMethod(vertex phoneId, string inputPath = “/tmp/lgweight.configure”, string internalClusterFileName = “/tmp/kMeanWeight_internal.csv”, string externalClusterFileName = “/tmp/kMeanWeight_external.csv”, bool reloadWeightInfo = false, string configFileName = “/tmp/collectFeatures.config”, bool reloadConfig = false, int numOfTopFriends = 5, float abnormalThreshold = 0.5, float advertisementThreshold = 0.5, bool usePrint = true) for graph testGraph returns (ListAccum)

{

TYPEDEF tuple<float weight, float scale, float mean> ParamInfo;

ListAccum @@featureList;

ListAccum @@scoreList;

int isExternal = 0;

int fraudFlag = -1;

static ListAccum @@paramInfo0;

static ListAccum @@paramInfo1;

static ListAccum @@paramInfo2;

static ListAccum @@paramInfo3;

static ListAccum<ListAccum > @@InternalCluster;

static ListAccum<ListAccum > @@ExternalCluster;

if (@@paramInfo0.size() == 0 or reloadWeightInfo == true) {

@@paramInfo0.clear();

@@paramInfo0 = {loadAccum(inputPath, $0,$1,$2,",", false)};

@@paramInfo1.clear();

@@paramInfo1 = {loadAccum(inputPath, $3,$4,$5,",", false)};

@@paramInfo2.clear();

@@paramInfo2 = {loadAccum(inputPath, $6,$7,$8,",", false)};

@@paramInfo3.clear();

@@paramInfo3 = {loadAccum(inputPath, $9,$10,$11,",", false)};

}

if (@@InternalCluster.size() == 0 or reloadWeightInfo == true) {

@@InternalCluster.clear();

@@InternalCluster = loadClusterInfo(internalClusterFileName);

@@ExternalCluster.clear();

@@ExternalCluster = loadClusterInfo(externalClusterFileName);

}

//call another gsql query to collect features

@@featureList = collectFeaturesR(phoneId, configFileName, reloadConfig, numOfTopFriends, false);

//obtain the last element of featureList which indicates isExternal

isExternal = @@featureList.get(69);

//scoring by calling an user defined expression function.

score(phoneId,

@@featureList,

abnormalThreshold,

advertisementThreshold,

@@paramInfo0,

@@paramInfo1,

@@paramInfo2,

@@paramInfo3,

@@scoreList);

//set the initial scamCluster as -1, which means not a valid scam

@@scoreList += -1;

//Get the fraud flag out

fraudFlag = @@scoreList.get(0);

if (fraudFlag == 3) {

if (isExternal == 0) {

updateScamClusterFlag(@@featureList, @@InternalCluster, @@scoreList);

} else {

updateScamClusterFlag(@@featureList, @@ExternalCluster, @@scoreList);

}

}

if (usePrint) {

print @@scoreList;

}

return @@scoreList;

}